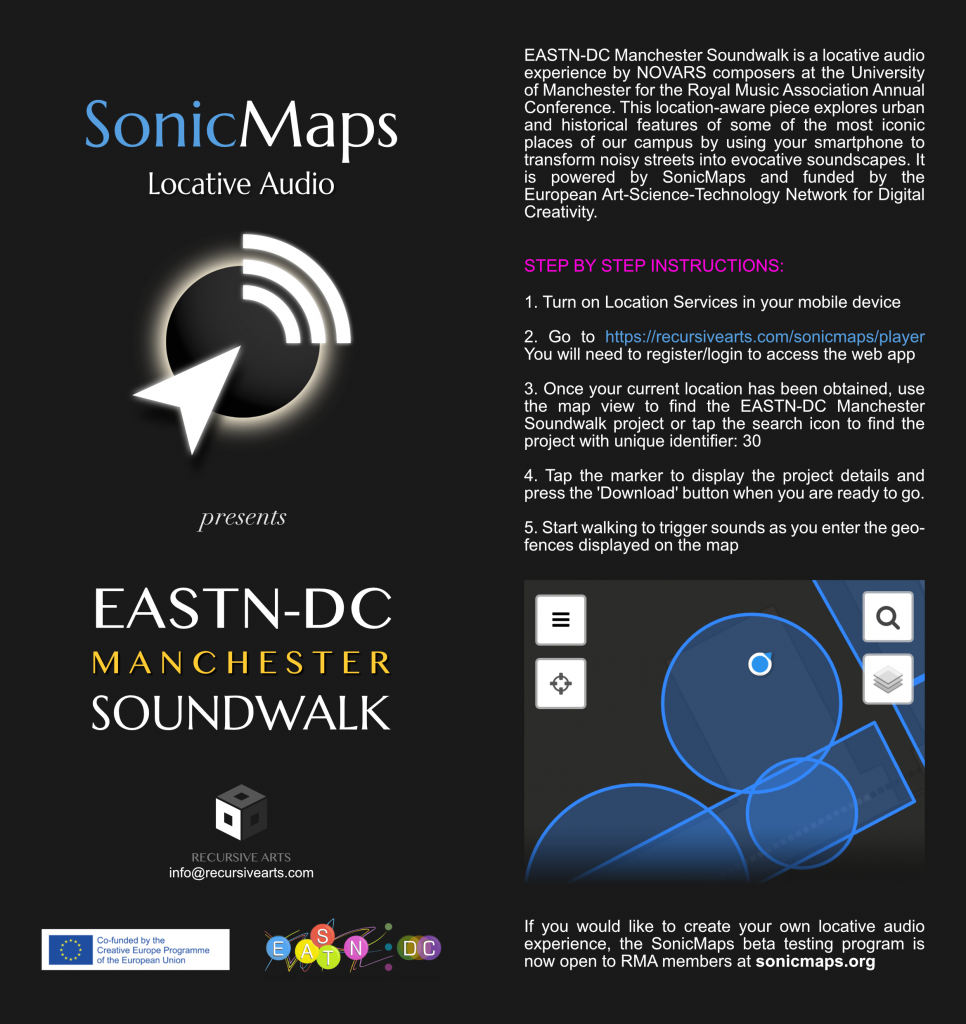

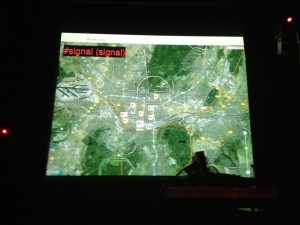

MAIA is a mixed reality simulation framework designed to materialise a digital overlay of creative ideas in synchronised trans-real environments. It was proposed as an extension of the author’s previous research on Locative Audio (SonicMaps).

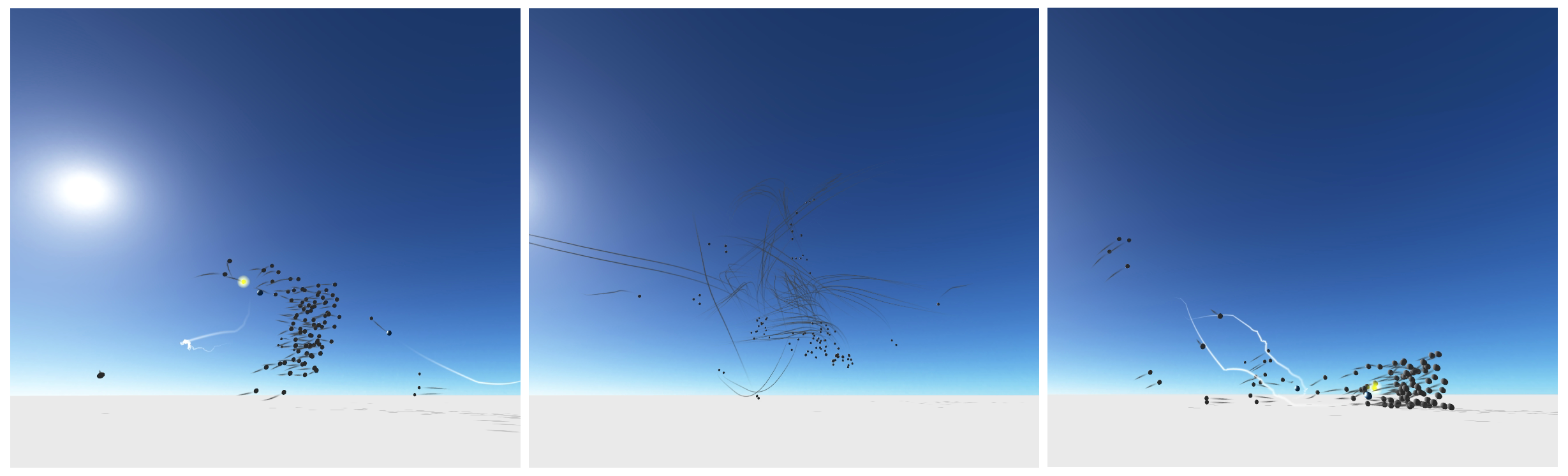

To this end, a number of hyper-real, 1:1 scale virtual replicas of real-world locations are to be built as 3D parallel worlds in which user-generated content is accurately localised, persistent, and automatically synchronised between the two worlds: the real location and its virtual counterpart.

The focus is on blurring the boundaries between the physical and digital worlds, facilitating creative activities and social interactions in selected locations regardless of whether users are physically or virtually present. We should thus be able to explore, create, and manipulate “in-world” digital content whether we are physically walking around these locations with an AR device (local users) or visiting their virtual replicas from a computer anywhere in the world (remote users). In both cases, local and remote agents will be able to collaborate and interact with each other while experiencing the same existing content (e.g. buildings, trees, data overlays). This idea resonates with Umberto Eco’s 1975 essay “Travels in Hyperreality”, in which models and imitations are not a mere reproduction of reality but an attempt to improve on it. In this context, VR environments in transreality can serve as accessible simulation tools for developing and producing localised AR experiences, but they can also be an end in themselves: an equally valid form of reality from a functional, structural, and perceptual point of view.

Content synchronisation takes place in the MAIA Cloud, an online software infrastructure for multimodal mixed reality. Any change to the digital overlay should be perceived immediately by all local and remote users sharing the affected location. For instance, if a remote user navigating the virtual counterpart of a location attaches a video stream to a building’s wall, any local user pointing an AR-enabled device at that physical wall will not only see the newly added video but might also be able to discuss it with its creator, who will be visible in the scene as an additional AR element.
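The synchronisation behaviour described above can be illustrated with a minimal, in-memory publish/subscribe sketch. It assumes that each synchronised location has one identifier shared by the physical site and its virtual replica, and that local (AR) and remote (VR) users subscribe to it in the same way. `OverlayHub`, `OverlayChange` and the location and anchor identifiers are hypothetical names, not part of the MAIA Cloud; a real service would also need a network transport, authentication and conflict handling, none of which is shown here.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class OverlayChange:
    location_id: str  # hypothetical id of a synchronised location (real site + replica)
    anchor_id: str    # hypothetical id of the object the content is attached to
    content: str      # e.g. the URL of a video stream
    author: str       # user who made the change


Listener = Callable[[OverlayChange], None]


class OverlayHub:
    """In-memory stand-in for a cloud sync service (hypothetical, no networking)."""

    def __init__(self) -> None:
        # location_id -> {user -> callback}; local (AR) and remote (VR) users are treated alike
        self._subscribers: Dict[str, Dict[str, Listener]] = defaultdict(dict)
        # location_id -> list of changes, so content persists for later visitors
        self._state: Dict[str, List[OverlayChange]] = defaultdict(list)

    def join(self, location_id: str, user: str, listener: Listener) -> List[OverlayChange]:
        """Subscribe a user to one location and return the content already there."""
        self._subscribers[location_id][user] = listener
        return list(self._state[location_id])

    def publish(self, change: OverlayChange) -> None:
        """Persist a change and push it to every other user at the same location."""
        self._state[change.location_id].append(change)
        for user, listener in self._subscribers[change.location_id].items():
            if user != change.author:
                listener(change)


# Usage: a remote VR user attaches a video to a wall; a local AR user at the same site is notified.
hub = OverlayHub()
received: List[OverlayChange] = []
hub.join("location-A", "local_ar_user", received.append)
hub.join("location-A", "remote_vr_user", lambda change: None)
hub.join("location-B", "user_elsewhere", received.append)  # different location: never notified
hub.publish(OverlayChange("location-A", "building-12/north-wall",
                          "https://example.org/stream", "remote_vr_user"))
late = hub.join("location-A", "late_visitor", lambda change: None)
print(len(received), received[0].anchor_id)  # 1 building-12/north-wall
print(len(late))  # 1
```

Keying subscriptions by location keeps updates scoped to the users who can actually perceive them, and returning the stored changes on `join` is one simple way to obtain the persistence the project calls for.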


A number of technical and conceptual challenges need to be overcome in order to meet the project’s goals, namely:

Developing appropriate abstractions (an API) for structuring and manipulating MR objects on the MAIA server.

Modelling precise 3D reproductions of real-world locations from 3D scans, architectural plans, photographs, etc.

Finding the best networking and multiplayer solutions to operate within the simulation engine.

Designing reliable outdoor 3D object-recognition algorithms (computer vision).

Providing robust localisation and anchoring of digital assets in absolute world coordinates.

Enabling interactions between virtual and physical objects in AR mode.
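Anchoring assets in absolute world coordinates implies a conversion between global coordinates and the local metric frame of each 1:1 replica. The sketch below assumes, purely for illustration, that anchors are stored as WGS84 latitude, longitude and ellipsoidal height, and that each virtual replica uses a local East-North-Up (ENU) frame in metres around a chosen origin; the function names and the origin are hypothetical, and the source does not state which coordinate system MAIA uses. Both points are converted to Earth-centred, Earth-fixed (ECEF) coordinates and their difference is rotated into the origin's ENU axes.

```python
import math
from typing import Tuple

# WGS84 ellipsoid constants
WGS84_A = 6378137.0                  # semi-major axis in metres
WGS84_F = 1 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2 - WGS84_F)   # first eccentricity squared


def geodetic_to_ecef(lat_deg: float, lon_deg: float, h: float) -> Tuple[float, float, float]:
    """Latitude/longitude in degrees and ellipsoidal height in metres -> ECEF metres."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    n = WGS84_A / math.sqrt(1 - WGS84_E2 * math.sin(lat) ** 2)  # prime vertical radius
    x = (n + h) * math.cos(lat) * math.cos(lon)
    y = (n + h) * math.cos(lat) * math.sin(lon)
    z = (n * (1 - WGS84_E2) + h) * math.sin(lat)
    return x, y, z


def anchor_to_local(anchor: Tuple[float, float, float],
                    origin: Tuple[float, float, float]) -> Tuple[float, float, float]:
    """Express a geodetic anchor as East-North-Up metres relative to a scene origin."""
    ax, ay, az = geodetic_to_ecef(*anchor)
    ox, oy, oz = geodetic_to_ecef(*origin)
    dx, dy, dz = ax - ox, ay - oy, az - oz
    lat0, lon0 = math.radians(origin[0]), math.radians(origin[1])
    east = -math.sin(lon0) * dx + math.cos(lon0) * dy
    north = (-math.sin(lat0) * math.cos(lon0) * dx
             - math.sin(lat0) * math.sin(lon0) * dy
             + math.cos(lat0) * dz)
    up = (math.cos(lat0) * math.cos(lon0) * dx
          + math.cos(lat0) * math.sin(lon0) * dy
          + math.sin(lat0) * dz)
    return east, north, up


# Usage with a hypothetical scene origin and an anchor 0.001 degrees north of it:
# east is ~0 m, north is ~111 m, up is slightly negative because of Earth's curvature.
origin = (53.4808, -2.2426, 40.0)
east, north, up = anchor_to_local((53.4818, -2.2426, 40.0), origin)
print(f"E={east:.2f} m, N={north:.2f} m, U={up:.3f} m")
```

Over the extent of a single location, an ENU frame behaves like the flat, metre-based coordinates of a game engine scene; mapping its axes onto a particular engine's convention (Unity, for instance, is Y-up) is left out of this sketch.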

The outcome of this research project will be made publicly available to content aggregators and general users as several separate apps (the MAIA client) for specific platforms. Depending on the access and navigation medium, the options currently under consideration are:

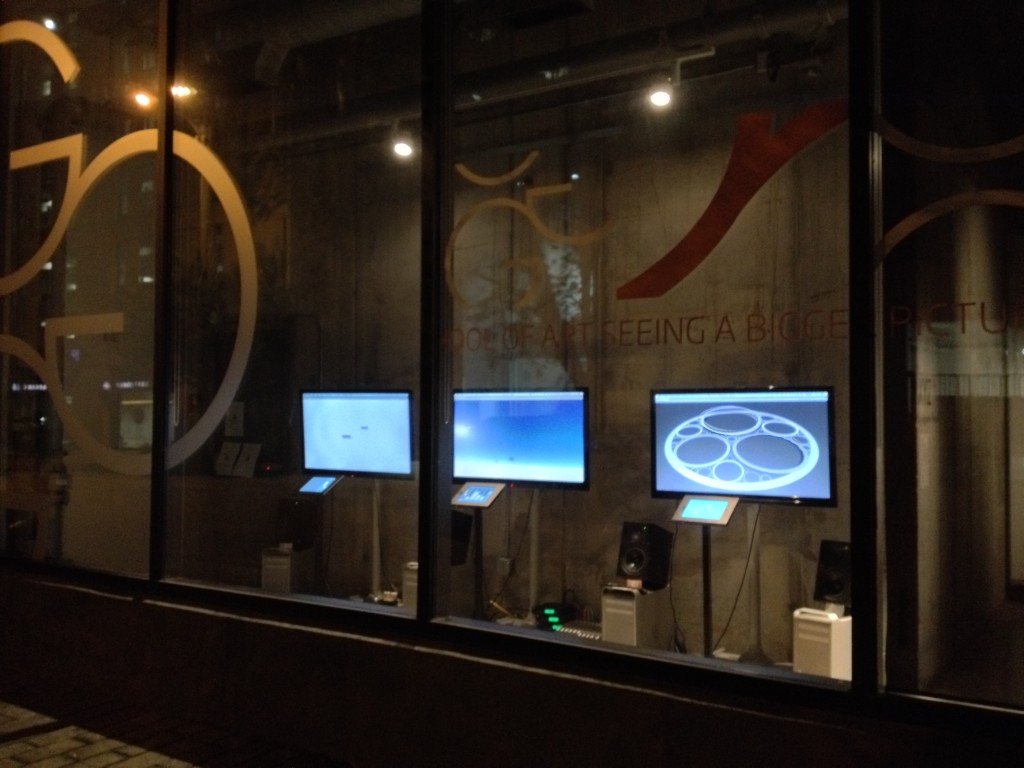

Virtual Reality Client (Virtual World Navigation)

A Unity WebGL online app for exploring virtual spaces in an HTML5-compliant web browser.

Fully immersive stereoscopic rendering of virtual environments using VR headsets such as Oculus Rift or HTC Vive.

A dedicated iOS app (iPad only).

Augmented Reality Client (Physical World Navigation)

A custom AR app for Android and iOS mobile devices (smartphones, tablets, iPads).

Ideally, the MAIA VR iPad app will also include an AR mode, so that a single app will suffice to explore both local and remote locations. Virtual reality visualisation on smartphones has not been considered because of the small viewport; a large screen is recommended for optimal content editing and management in these 3D environments.

All the apps will share similar functionality, as they should provide multiplayer access to selected locations and their characteristic objects and urban features (e.g. buildings, traffic signs, benches, statues). These physical objects, whether seen through a mobile device’s camera or as virtual representations in the parallel virtual world, may be used as anchors, markers or containers for our digital content (i.e. text, images, sounds, static/dynamic 3D models), and we shall be able to set privacy levels to decide who can access it. If no privacy restrictions are imposed, new content is added to MAIA’s public layer, where anyone in the MAIA network can access it.
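The privacy levels mentioned above could be modelled as a visibility attribute on each content item, with the public layer as the default. The three levels used here (public, shared with named users, private to the owner), as well as `OverlayItem`, `can_access` and `visible_layer`, are assumptions made for illustration; the source does not specify MAIA's actual access-control model.

```python
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Iterable, List


class Visibility(Enum):
    PUBLIC = "public"    # default: the item belongs to the public layer
    SHARED = "shared"    # visible to an explicit set of users (hypothetical level)
    PRIVATE = "private"  # visible to its owner only


@dataclass(frozen=True)
class OverlayItem:
    owner: str
    payload: str                                   # e.g. a sound file or 3D model reference
    visibility: Visibility = Visibility.PUBLIC     # no restriction -> public layer
    allowed: FrozenSet[str] = frozenset()          # only consulted for SHARED items


def can_access(item: OverlayItem, user: str) -> bool:
    """Owners always see their items; others depend on the visibility level."""
    if user == item.owner or item.visibility is Visibility.PUBLIC:
        return True
    if item.visibility is Visibility.SHARED:
        return user in item.allowed
    return False


def visible_layer(items: Iterable[OverlayItem], user: str) -> List[OverlayItem]:
    """Filter the overlay down to what a given user is allowed to perceive."""
    return [item for item in items if can_access(item, user)]


items = [
    OverlayItem("ana", "sound.ogg"),
    OverlayItem("ana", "note.txt", Visibility.PRIVATE),
    OverlayItem("ana", "model.glb", Visibility.SHARED, frozenset({"ben"})),
]
print([i.payload for i in visible_layer(items, "ben")])   # ['sound.ogg', 'model.glb']
print([i.payload for i in visible_layer(items, "carl")])  # ['sound.ogg']
```

Filtering on the server side, before content is pushed to a client, would keep restricted items from ever reaching devices that are not allowed to display them.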

The name MAIA was chosen with the etymology given in Indian philosophy in mind, where the word maia is interpreted as “illusion”, “magic”, or the mysterious power that turns an idea into physical reality, ideas being “the real thing” and their physical materialisation “just an illusion” to our human eyes. In this sense, the digital overlay proposed here constitutes a world of existing ideas in the MAIA Cloud, materialising in trans-real environments via virtual or augmented reality.

Dr. Ignacio Pecino

Artist-in-residence EASTN-DC Manchester

Funded by the European Arts Science Technology Network