PROCEDURAL – GENERATIVE

“Boids” (2014) – excerpt

This piece proposes a non-conventional 3D virtual instrument based on a flocking-behaviour algorithm (boids), which works as a spatial/kinematic model for real-time procedural audio and for the exploration of sound gesture, texture and spatialization. This model was chosen because of its AI/emergent properties; its minimalist visual representation also reinforces the aural aspect of the piece. The boids algorithm was implemented in a 3D simulation environment (Unity + C#), including random/generative elements for a non-deterministic micro-structure, and it is controlled through a set of custom control methods (an API) representing multiple instrumental techniques. Each of these methods encapsulates a number of code instructions (procedures) with a particular effect on the flock’s state/configuration, and can be called from a third “sequencer” script as an organised list of events (procedural composition). Sounds are generated in real time in SuperCollider from the spatial and physics information of the simulated flock agents (particle height, velocity, collisions, etc.). Among other results, this sonification process produces rich, dynamic textures whose spectral content, density and distribution ultimately reflect the flock’s state.
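
The following is a minimal Python sketch of the idea, not the piece's Unity/C# code. Reynolds' three steering rules (separation, alignment, cohesion) move a 3D flock; a hypothetical `scatter` method stands in for one of the “instrumental techniques”; a `{tick: method_name}` score plays the role of the sequencer script; and each agent's height and speed are mapped to a frequency and an amplitude. All names, constants and the height-to-pitch/speed-to-amplitude mapping are assumptions. In the piece itself, such values were sent to SuperCollider rather than returned as tuples.

```python
import math
import random

# Hypothetical tuning constants; the piece's actual values are not documented.
NEIGHBOUR_RADIUS = 2.0
SEPARATION_RADIUS = 0.5
MAX_SPEED = 1.0


def _limit(v, max_len):
    """Scale vector v down so its length does not exceed max_len."""
    n = math.sqrt(sum(c * c for c in v))
    return [c * max_len / n for c in v] if n > max_len else list(v)


class Boid:
    def __init__(self, rng):
        self.pos = [rng.uniform(-5.0, 5.0) for _ in range(3)]
        self.vel = _limit([rng.uniform(-1.0, 1.0) for _ in range(3)], MAX_SPEED)


def step(flock, dt=0.1, w_sep=0.05, w_ali=0.05, w_coh=0.01):
    """One tick of Reynolds' steering rules: separation, alignment, cohesion."""
    new_vels = []
    for b in flock:
        sep, ali, coh, count = [0.0] * 3, [0.0] * 3, [0.0] * 3, 0
        for other in flock:
            if other is b:
                continue
            d = [o - s for o, s in zip(other.pos, b.pos)]
            dist = math.sqrt(sum(c * c for c in d))
            if dist < NEIGHBOUR_RADIUS:
                count += 1
                ali = [a + v for a, v in zip(ali, other.vel)]
                coh = [c + p for c, p in zip(coh, other.pos)]
                if 0.0 < dist < SEPARATION_RADIUS:
                    # Steer away from very close neighbours.
                    sep = [s - dc / dist for s, dc in zip(sep, d)]
        vel = list(b.vel)
        if count:
            ali = [a / count - v for a, v in zip(ali, b.vel)]  # match neighbours' mean velocity
            coh = [c / count - p for c, p in zip(coh, b.pos)]  # move toward their centre
            vel = [v + w_sep * s + w_ali * a + w_coh * c
                   for v, s, a, c in zip(vel, sep, ali, coh)]
        new_vels.append(_limit(vel, MAX_SPEED))
    # Apply all new velocities only after computing them (synchronous update).
    for b, v in zip(flock, new_vels):
        b.vel = v
        b.pos = [p + vi * dt for p, vi in zip(b.pos, v)]


def scatter(flock, rng, strength=1.0):
    """Hypothetical control method: a random impulse that breaks up the flock."""
    for b in flock:
        b.vel = _limit([v + rng.uniform(-strength, strength) for v in b.vel], MAX_SPEED)


def sonify(boid, low_hz=110.0, high_hz=880.0, y_min=-5.0, y_max=5.0):
    """Hypothetical mapping: height -> pitch (exponential), speed -> amplitude."""
    t = min(max((boid.pos[1] - y_min) / (y_max - y_min), 0.0), 1.0)
    freq = low_hz * (high_hz / low_hz) ** t
    amp = min(math.sqrt(sum(v * v for v in boid.vel)) / MAX_SPEED, 1.0)
    return freq, amp


def run(score, ticks, n_boids=20, seed=1):
    """Play a 'sequencer' score {tick: method_name}; return per-tick lists of (freq, amp)."""
    rng = random.Random(seed)
    flock = [Boid(rng) for _ in range(n_boids)]
    methods = {"scatter": lambda: scatter(flock, rng)}
    frames = []
    for tick in range(ticks):
        if tick in score:
            methods[score[tick]]()
        step(flock)
        frames.append([sonify(b) for b in flock])
    return frames
```

For example, `run({10: "scatter", 30: "scatter"}, ticks=50)` yields 50 frames of per-agent (frequency, amplitude) pairs that could be streamed to a synthesis engine. Keeping each technique as a named method is what allows a score to stay a plain list of events.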

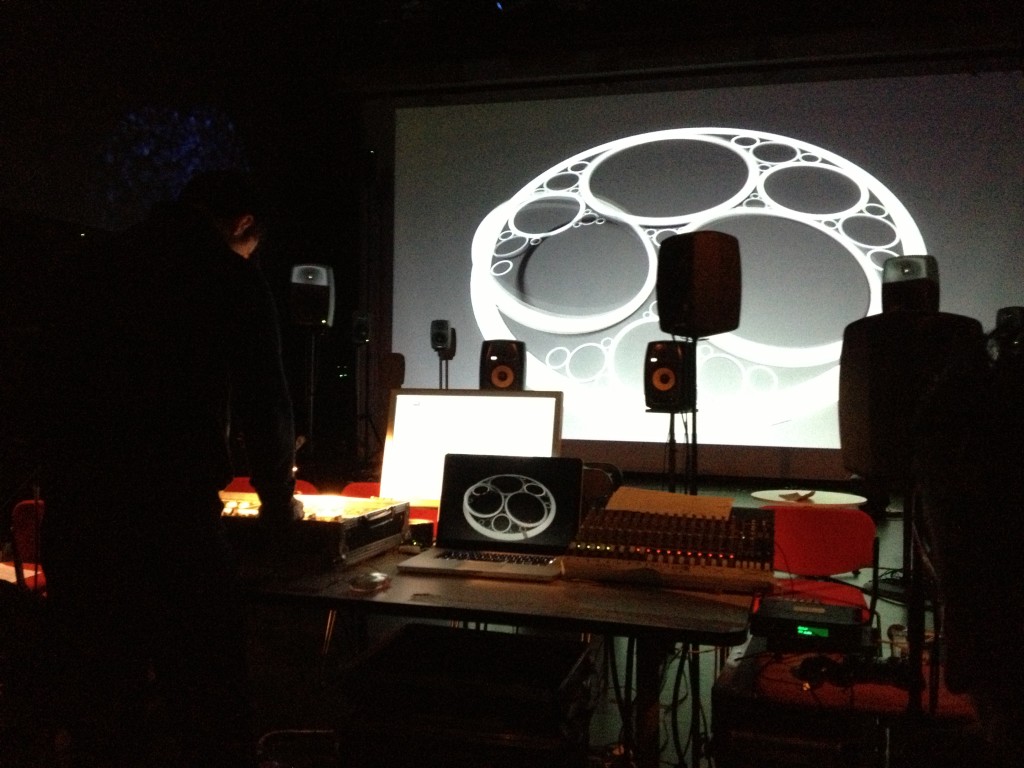

“Apollonian Gasket” – A Sound Sculpture (2014)

An exploration of the kinematics of virtual objects as a means of generating musical gesture and procedural sound. Composed using Unity (C#) + SuperCollider.

“Singularity” (2013)

This piece proposes a non-interactive, linear macro-structure in which materials have been preselected and organised following a premeditated direction, in the manner of the fixed-media tradition. However, a closer look at the sound materials reveals that most of them are generated algorithmically in real time through a sonification of cellular automata, producing an ever-changing concatenative synthesis effect. These automata are fed by a large array of micro-sounds carefully dissected from a large corpus of industrial recordings made at Manchester’s MOSI museum.

Given the characteristic spatial distribution of the cells, a degree of sound spatialization can be achieved by controlling the automaton’s initial configuration, which defines the resulting pattern or motif.
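
A minimal Python sketch of how a cellular automaton might drive both grain selection and panning. It assumes Conway's Game of Life (B3/S23) on a wrap-around grid, a hypothetical corpus of `n_grains` micro-sounds indexed by cell position, and a simple column-to-stereo-pan mapping. The rule, the mappings and the function names are illustrative, not the ones used in “Singularity”.

```python
from typing import Dict, List, Set, Tuple

Cell = Tuple[int, int]  # (row, column)


def life_step(live: Set[Cell], rows: int, cols: int) -> Set[Cell]:
    """One generation of Conway's Game of Life (B3/S23) on a toroidal grid."""
    counts: Dict[Cell, int] = {}
    for r, c in live:
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                if dr or dc:
                    n = ((r + dr) % rows, (c + dc) % cols)
                    counts[n] = counts.get(n, 0) + 1
    return {cell for cell, k in counts.items() if k == 3 or (k == 2 and cell in live)}


def grain_events(live: Set[Cell], cols: int, n_grains: int) -> List[Tuple[int, float]]:
    """Each live cell triggers one micro-sound: index from its position, pan from its column."""
    events = []
    for r, c in sorted(live):
        grain = (r * cols + c) % n_grains       # which micro-sound to concatenate
        pan = -1.0 + 2.0 * c / (cols - 1)       # left (-1.0) to right (+1.0); needs cols > 1
        events.append((grain, pan))
    return events


def mean_pan(events: List[Tuple[int, float]]) -> float:
    """Average stereo position of one generation's events (0.0 if silent)."""
    return sum(p for _, p in events) / len(events) if events else 0.0
```

Seeding the grid with a glider instead of a static pattern makes the mean pan drift from generation to generation as the glider travels (wrapping around the edges), which illustrates how the initial configuration alone can shape the spatial motion.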

Furthermore, the piece explores spatialization techniques for mono, stereo and quadraphonic audio sources (stems) in a 3D virtual space, as well as new ways of sequencing sound materials using JavaScript/C# scripting and event-based triggering.

This work was premiered during the MANTIS Autumn Festival 2013 and also presented in Spain at Punto de Encuentro AMEE Málaga (Nov 2013).

An online interactive demo of the automata sonification is also available.

LOCATIVE AUDIO

“Alice: Elegy to the Memory of an Unfortunate Lady” (2012)

Interactive piece using the SonicMaps mobile app for sound geolocation (Beta Release + OSC implementation).

Based on a poem by Alexander Pope and an urban legend about the ghost of a girl named Alice who wanders around the park. Location: Whitworth Park (Manchester).

This piece was premiered at the “2012 Interactive AudioGame Showcase”, featuring a Unity3D virtual representation of Whitworth Park that displayed the current position of a remote user walking “live” in the real park. The audience in the concert hall could listen to the pre-composed sounds as they were triggered by the remote user.

INTERACTIVE

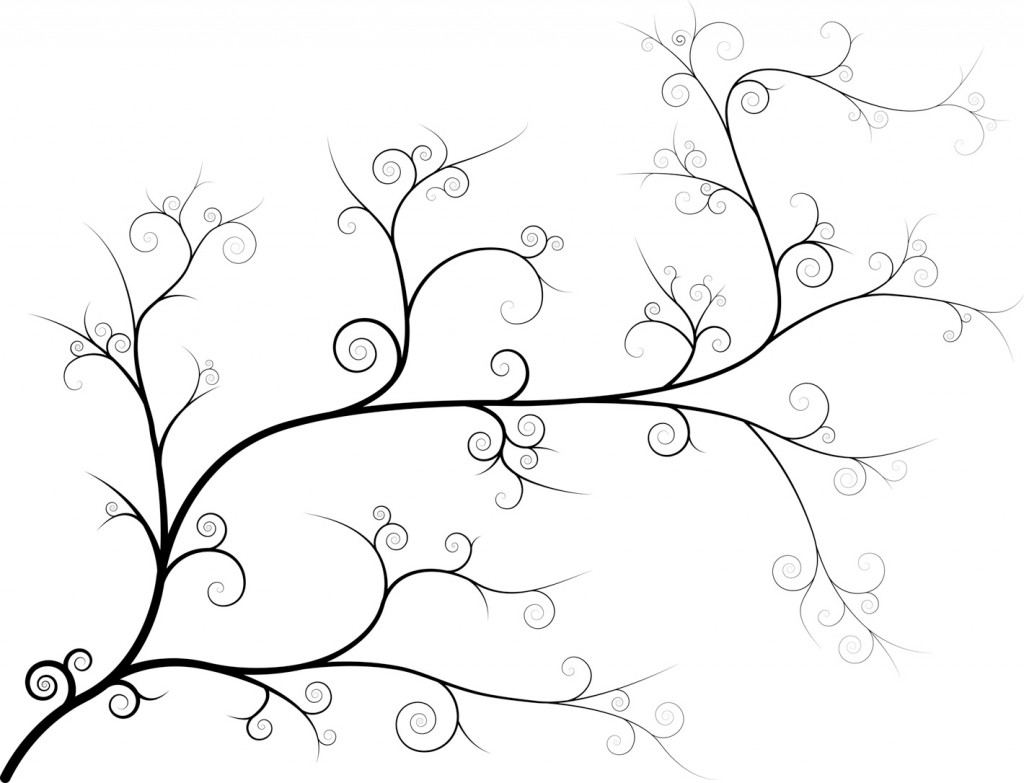

“Swirls” (2012)

Swirls explores the possibility of multi-user interaction with an electroacoustic piece in a “concert hall situation”. It also presents a number of “hidden soundscapes” as a new, interactive way of introducing sonic materials to the audience. Several technical challenges had to be solved along the way, opening up new expressive and creative possibilities. For example:

– Android (phone, tablet, etc.) to Unity3D (Game-Engine) communication: the accelerometer serves as an intuitive method of spatial navigation, and custom buttons, faders, etc. can be created for behavior control. Data is transferred wirelessly using the OSC protocol via OSCulator. The number of simultaneous users/interfaces is limited only by network bandwidth and the complexity of the routing/scripting (“swarm intelligence” pieces are possible). The custom Android app was developed using the Eclipse development environment.

– Unity3D (Game-Engine) to MAX/MSP (Audio-Engine) communication: spatial information within a virtual environment can be used to trigger and transform sounds running in real time in external audio software such as MAX/MSP. OSC data is again managed by OSCulator, using a different network port.

The structure of the piece is given by a number of paths or branches drawn on the terrain of a virtual environment in Unity3D (Game-Engine). These paths are organized in a fractal configuration and contain a number of swirl shapes grouped in clusters (see figure). Each cluster is associated with a synthesizer instance in MAX/MSP via Ableton Live (for easier data mapping). In addition, each swirl within a cluster has its own characteristics (pitch, brightness, etc.).

Each swirl sounds different depending on how fast we “collide” with it, which user/particle triggers it, etc. All these characteristics are scripted in JavaScript in Unity3D and then sent as OSC data over a computer network for musical interpretation.
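
The message path from the game engine to the audio engine can be sketched with the Python standard library: an encoder for OSC 1.0 messages (NUL-padded address and type-tag strings, big-endian int32/float32 arguments) plus a UDP send. The `/swirl/hit` address, the argument order, the 0..1 velocity scaling and the host/port are hypothetical placeholders, not the piece's actual OSCulator routing.

```python
import socket
import struct


def _pad(data: bytes) -> bytes:
    """OSC strings end with a NUL and are padded with NULs to a multiple of 4 bytes."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args) -> bytes:
    """Encode an OSC 1.0 message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    tags, payload = ",", b""
    for a in args:
        if isinstance(a, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(a, int):
            tags += "i"
            payload += struct.pack(">i", a)   # big-endian 32-bit integer
        elif isinstance(a, float):
            tags += "f"
            payload += struct.pack(">f", a)   # big-endian IEEE 754 float32
        elif isinstance(a, str):
            tags += "s"
            payload += _pad(a.encode("ascii"))
        else:
            raise TypeError(f"unsupported OSC argument: {a!r}")
    return _pad(address.encode("ascii")) + _pad(tags.encode("ascii")) + payload


def swirl_hit(cluster: int, swirl: int, user: int, impact_speed: float,
              max_speed: float = 10.0) -> bytes:
    """Hypothetical message: which swirl was hit, by which user, and how hard (0..1)."""
    velocity = min(max(impact_speed / max_speed, 0.0), 1.0)
    return osc_message("/swirl/hit", cluster, swirl, user, velocity)


def send(message: bytes, host: str = "127.0.0.1", port: int = 8000) -> None:
    """Send one OSC packet over UDP; host and port are placeholders."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))
```

Encoding the user index and a normalised impact velocity as separate arguments keeps the musical interpretation (which synth, which timbre) entirely on the receiving side, where the mapping can be changed without touching the game-engine scripts.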

Spatial navigation is made possible by a custom application for Android devices (e.g. smartphones), programmed to use the accelerometer as a very simple, intuitive and natural interface. Several people can therefore interact with the piece wirelessly and simultaneously from their seats in the concert hall, wherever they are sitting.

Unlike a classical sound installation, this piece is designed for the “concert hall situation”, with an approximately fixed duration while still maintaining its interactive nature. Furthermore, audience members without an interface are considered a “control group” or “listeners”, who should enjoy the experience equally.

Sounds are organized so that their “order of surrogacy” (Smalley) increases as we move toward the end of each path/branch. Thus, the first swirls are usually linked to an FM or additive synthesizer producing simpler, abstract sounds; further along, we might find, for instance, a physical-modelling synthesizer with richer, more recognizable sounds.

The culmination of every path is a “hidden soundscape” whose pre-recorded sounds are to be discovered by the audience. A sound only plays when at least one of the users (represented by a flying light particle) is close enough to it. I consider this a good way to raise awareness of the sounds used in the soundscape, since they are presented progressively as we discover them. Collaborative behaviors are expected to emerge in the audience as people try to uncover the hidden nature of the musical context.
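
The “at least one user close enough” rule can be sketched as a per-frame check in Python. The sketch assumes 3D positions as tuples, a single trigger radius shared by all sounds, and hypothetical sound names; it reports which sounds should start and stop so that only state changes need to be sent to the audio engine.

```python
import math
from typing import Dict, List, Sequence, Set, Tuple

Vec3 = Tuple[float, float, float]


def audible_sounds(sounds: Dict[str, Vec3], users: Sequence[Vec3], radius: float) -> Set[str]:
    """Names of the sounds that have at least one user within `radius` of them."""
    return {name for name, pos in sounds.items()
            if any(math.dist(pos, u) <= radius for u in users)}


def update(playing: Set[str], sounds: Dict[str, Vec3], users: Sequence[Vec3],
           radius: float) -> Tuple[Set[str], List[str], List[str]]:
    """Compare with the previous frame and report which sounds to start and stop."""
    now = audible_sounds(sounds, users, radius)
    return now, sorted(now - playing), sorted(playing - now)
```

Calling `update` once per frame and sending only the started/stopped names avoids flooding the network with redundant messages. A larger stop radius than start radius (hysteresis) would be a natural refinement if users hovering at the edge cause sounds to flicker.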

“Autoverso” (2011)

This work explores the possibilities of using “virtual space” as a means of musical structural organization and sonic behaviour. The piece was inspired by Greg Egan’s novel “Permutation City”, which explores concepts such as quantum ontology, artificial life and simulated reality. It also deals with the idea of an “Autoverse”, an artificial-life simulator based on a cellular automaton complex enough to represent the substratum of an artificial chemistry, which is represented sonically in this piece. Thus, a set of sound quanta (particles) interact with each other, and with the listener, to build increasingly complex structures. The resulting spatial symmetries are not only visual but are also perceived through sound positioning in a 5.1 surround set-up, especially when our “avatar particle” becomes part of these structures.
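
Positioning a particle in a 5.1 set-up can be sketched as pairwise constant-power (sine/cosine) panning, a simpler cousin of 2D VBAP: the source's azimuth selects the two adjacent speakers that bracket it, and their gains always have a squared sum of 1. The speaker angles assume a common ITU-R BS.775-style layout, the listener is assumed to face +z with x to the right (as in Unity's coordinate system), and nothing here is taken from the piece's actual patch.

```python
import math

# Assumed speaker azimuths in degrees, clockwise from front (ITU-R BS.775-style layout).
# The LFE channel carries no directional information and is omitted.
SPEAKERS = {"C": 0.0, "R": 30.0, "Rs": 110.0, "Ls": 250.0, "L": 330.0}


def azimuth_from_position(x: float, z: float) -> float:
    """Azimuth of a source relative to a listener at the origin facing +z (x to the right)."""
    return math.degrees(math.atan2(x, z)) % 360.0


def pan_5_1(azimuth_deg: float) -> dict:
    """Constant-power gains for the two speakers that bracket the source azimuth."""
    az = azimuth_deg % 360.0
    ring = sorted(SPEAKERS, key=SPEAKERS.get)   # speakers in clockwise order
    gains = {name: 0.0 for name in SPEAKERS}
    for i, a in enumerate(ring):
        b = ring[(i + 1) % len(ring)]           # the last pair wraps from L back to C
        width = (SPEAKERS[b] - SPEAKERS[a]) % 360.0
        offset = (az - SPEAKERS[a]) % 360.0
        if offset <= width:
            frac = offset / width
            gains[a] = math.cos(frac * math.pi / 2)
            gains[b] = math.sin(frac * math.pi / 2)
            break
    return gains
```

A particle directly to the listener's right, for instance, lies between the R and Rs speakers and is weighted toward Rs. Because the summed power is constant, a particle orbiting the listener keeps a steady loudness while it moves around the ring.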

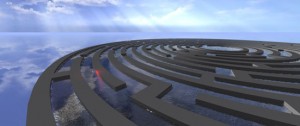

“Laberinto” (2011)

“Laberinto” is a prototype for a spatial-sonic navigation tool, developed with game-engine technology, specifically the Unity 3D game engine (although other engines could also be used). The scene is a “circular maze” in which a number of sonic spheres are placed, each containing a particular, characteristic sound that plays whenever the user gets close to it. Sounds are distributed in space according to their spectromorphological properties (note the graphic scheme), which helps the user find their way to the centre of the maze. In addition, some pre-composed sections can be found at several strategic locations, contrasting with the visual monotony of the maze and offering a more conventional electroacoustic miniature. In the video example, a “morphing” cube is displayed and the sounds try to emphasize its “gestural” and spatial qualities. This pre-composed material could, however, be given a certain degree of interactivity in future development. Once the user reaches the centre of the maze, a visual and sonic reward is given: a “whole” view of the maze is shown, and the sounds are now played simultaneously instead of sequentially in time. The focus therefore shifts to the textural quality of the mix rather than to sequential relationships.

ACOUSMATIC

“Slooshing Burgess” (2011) – excerpt

“Slooshing Burgess” is an imaginary sonic trip into Anthony Burgess’s mind, in which subtle references to his life and work are explored. “Slooshy” is the Nadsat word (from the language Burgess invented for A Clockwork Orange) for “hear”, suggesting an acoustic exploration of that perfect clockwork of thoughts, beliefs and passions; in other words, the mind of a true humanist and genius who offered us a rare, objective and neutral image of human nature. The piece was commissioned by the International Anthony Burgess Foundation in Manchester (UK) and premiered on 29 March 2011. The excerpt is a stereo downmix of the original four-channel composition.

“Branches” (2011) – excerpt

“Branches” explores time as cycles in the growth patterns of an acousmatic tree. In addition, hidden poetry emerges from its leaves when air passes through them, suggesting an abstract narrative layer. Inspired by a Lithuanian poem about a mother and her son.

“Khaos” (2009)

Khaos is a quadraphonic acousmatic piece that follows a classical ternary form (A-B-A). The first and third sections were built using several synthesis techniques, emphasizing abstract structural relationships within timbre and space. In contrast, the second section is a hyper-realistic soundscape where semantic interpretations easily emerge through source-bonding processes (although structural parameters remain the same). Two distant places are suggested in this soundscape, both with a common political background: sounds of power and destruction, reflecting “chaos” as the unavoidable end of every living system (entropy growth). Analogous forces lead the piece to a final chaotic state of “inexpressive equilibrium”, where silence is the only logical continuation.

AUDIO-VISUAL

“Adi Shakti” (2007)

Adi Shakti is an electroacoustic composition in the musique concrète tradition, created from a single five-second sound. Its overall form metaphorically imitates the birth and death of the universe as one of its infinite renewals. Adi Shakti is a Hindu concept of the ultimate Shakti, the ultimate feminine power inherent in all Creation. Likewise, all the video material was created from a single image of the audio’s spectrum (sonogram).

INSTRUMENTAL

“Granado/2” (2010)

Aria for Tenor (based on a poem by Francisco Ruiz Noguera). Commissioned by the “Orquesta Provincial de Málaga”.

“Piano Sonata” (2008)

Performed by Juan Ignacio Fernández